Introduction

Measurement plays an important role in our day-to-day life; it helps us understand and learn more about our universe. Measurement makes things understandable, and it requires tools that let us measure the dimensions of the objects around us. Every measurement, however, carries some uncertainty, known as error. These errors are reduced by taking measurements precisely and accurately. Precision and accuracy are therefore the two factors that make a measurement a standard, accepted value.

Accuracy

Accuracy can be defined as the ability of an instrument to measure the true value. In other words, accuracy is the closeness of a measured value to the reference or standard value. Taking small readings reduces the error, which in turn increases the accuracy of a measurement. Accuracy can be classified as follows:

1. Point accuracy

It is the accuracy of an instrument at a given point on its scale. Because it is taken at a single point only, this type of accuracy gives no information about the general accuracy of the instrument.

2. Accuracy as percentage of scale range

For an instrument with a uniform scale, accuracy is expressed as a percentage of the full scale range. Every scale covers a given range of values, and the error is quoted relative to that range.

3. Accuracy as percentage of true value

This type of accuracy is found by comparing the measured value with its true (standard) value. Deviations of up to ±0.5% of the true value are considered negligible.
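The last two classifications can be illustrated with a short calculation. This is a minimal sketch: the function names and the thermometer readings are hypothetical and chosen only for illustration.

```python
def error_percent_of_true_value(measured, true_value):
    """Signed error as a percentage of the true (reference) value."""
    return (measured - true_value) / true_value * 100.0


def error_percent_of_scale_range(measured, true_value, scale_min, scale_max):
    """Signed error as a percentage of the instrument's full scale range."""
    return (measured - true_value) / (scale_max - scale_min) * 100.0


# Hypothetical thermometer with a 0 to 200 degree scale that reads 50.5
# when the true temperature is 50.0 (invented numbers).
reading, reference = 50.5, 50.0
print(f"{error_percent_of_true_value(reading, reference):.2f}% of true value")
print(f"{error_percent_of_scale_range(reading, reference, 0.0, 200.0):.2f}% of scale range")
```

The same 0.5 degree error is 1% of the true value but only 0.25% of the scale range, which is why it matters which of the two conventions an accuracy figure is quoted in.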

Precision

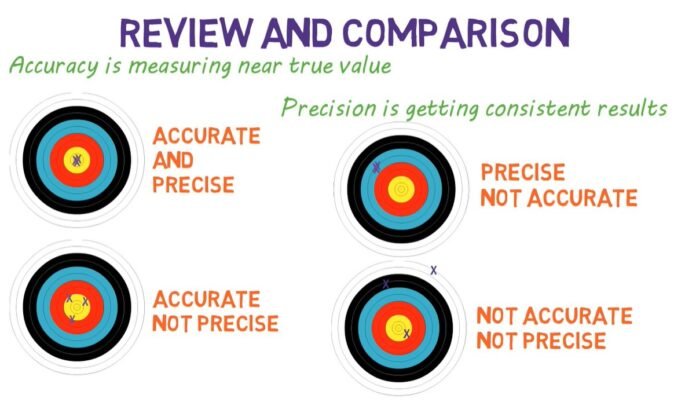

Measurements are said to be precise when two or more of them are very close to one another. In other words, precision is the closeness of various measurements to one another. Precision and accuracy are two independent quantities: a measurement can be precise but not accurate, or accurate but not precise. Precision can be divided into:

· Repeatability: the variation observed when back-to-back measurements are taken over a short span of time, keeping the conditions the same throughout.

· Reproducibility: the variation observed when different measuring methods, different instruments and different operators are used to take the measurements. These measurements are taken over a long period of time.
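One common way to put a number on these two kinds of variation is the standard deviation of the readings. The sketch below is a simplified illustration, not a formal gauge study: the operator names and readings are invented, and pooling all readings is only a rough stand-in for a proper reproducibility analysis.

```python
from statistics import stdev

# Hypothetical readings (in mm) of the same part taken by two operators.
readings_by_operator = {
    "operator_a": [10.02, 10.03, 10.01, 10.02],
    "operator_b": [10.10, 10.11, 10.09, 10.10],
}

# Repeatability: spread of back-to-back readings under the same conditions.
repeatability = {
    name: stdev(values) for name, values in readings_by_operator.items()
}

# Reproducibility (simplified): spread once readings from all operators are pooled.
pooled = [v for values in readings_by_operator.values() for v in values]
reproducibility = stdev(pooled)

for name, spread in repeatability.items():
    print(f"{name}: repeatability spread = {spread:.3f} mm")
print(f"pooled reproducibility spread = {reproducibility:.3f} mm")
```

In this invented data set each operator is individually consistent, but the two disagree with each other, so the pooled spread is much larger than either operator's own spread.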

Examples of precision and accuracy

Accuracy and precision can be understood through the example of an archer aiming at a target. If the arrows land at different points scattered around the centre of the target, the archer is accurate but not precise. An archer who hits the same point again and again is precise, whether or not that point is the centre. Thus a precise archer groups the arrows tightly, while an accurate archer's arrows land close to the centre even if they are spread out.

Measurements from a scale that lie close to the true mass of a body are said to be accurate. Suppose a body has a mass of 15 kg. If one scale gives readings of 13.1, 13.3, 13 and 13.15 kg, it is precise but not accurate. A second scale that reads 15.1, 14.9 and 15.2 kg is more accurate than the first scale but less precise.
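The scale example can be checked numerically. The sketch below uses the readings from the text and assumes, as one reasonable choice, that accuracy is judged by the mean offset from the true mass (the bias) and precision by the sample standard deviation (the spread). The function name `summarize` is illustrative.

```python
from statistics import mean, stdev

TRUE_MASS_KG = 15.0  # true mass of the body in the example

scale_one = [13.1, 13.3, 13.0, 13.15]
scale_two = [15.1, 14.9, 15.2]


def summarize(readings, true_value):
    """Return (bias, spread): mean offset from the true value and sample standard deviation."""
    return mean(readings) - true_value, stdev(readings)


for label, readings in (("first scale", scale_one), ("second scale", scale_two)):
    bias, spread = summarize(readings, TRUE_MASS_KG)
    print(f"{label}: bias = {bias:+.3f} kg, spread = {spread:.3f} kg")
```

The first scale has the smaller spread but is off by nearly 2 kg, while the second scale has a bias close to zero and a slightly larger spread, which matches the description above.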

Difference between Accuracy and Precision

Having discussed what each term means in the previous sections, let us now look at their differences.

| Accuracy | Precision |
|---|---|
| It determines the difference between the measured value and the standard value. | It gives the variation that lies between a set of values or measurements taken for a particular substance or factor. |
| It compares the results obtained with the standard value. | It represents how closely results agree with one another. |
| Only one measurement is required to assess accuracy. | More than one measurement is required to say anything about precision. |
| A measurement can sometimes be accurate by a guess or fluke. To be accurate consistently, measurements need to be precise as well as accurate. | Results can be precise without being accurate. Alternatively, the results can be both precise and accurate. |

False precision

Madsen Pirie defines “false precision” as the use of exact numbers to express a measurement or notion that cannot be expressed in exact terms. In the scientific community, there is a criterion which states that all the non-zero digits of a reported number are meaningful. Hence, if one provides excessive figures, evaluators may expect better precision than was really achieved in the experiment.
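One way to avoid false precision in a computed result is to round it to the number of significant figures that the input data supports. The helper below is a minimal sketch; `round_to_sig_figs` is an illustrative name, and the 2.0 m length is an invented example.

```python
from math import floor, log10


def round_to_sig_figs(value, sig_figs):
    """Round value to the given number of significant figures."""
    if value == 0:
        return 0.0
    exponent = floor(log10(abs(value)))  # position of the leading digit
    return round(value, sig_figs - 1 - exponent)


# Hypothetical case: a length measured as 2.0 m (two significant figures)
# is divided into 3 equal parts.
raw = 2.0 / 3
print(raw)                        # many digits, most not supported by the data
print(round_to_sig_figs(raw, 2))  # 0.67
```

Reporting 0.67 m rather than the full floating-point value keeps the stated result in line with the precision of the original measurement.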


Accuracy and precision measurement FAQs

What is meant by accuracy?

It refers to the nearness of the measured values to that of the absolute value.

What is the classification of accuracy of the system?

Accuracy can be classified as follows: point accuracy, accuracy as the percentage of scale range, and accuracy as the percentage of true value.

What is Error?

It is the difference between the obtained values and the actual value. Error can be positive as well as negative.

What do you mean by point accuracy?

It is the accuracy of any instrument at a specified point on a scale.

It varies from scale to scale. The results obtained can be accurate as well as precise.